Hi there!

I'm a fourth-year PhD student at UC San Diego in the Center for Visual Computing, advised by Prof. Tzu-Mao Li and Prof. Ravi Ramamoorthi. My research focuses on inverse graphics, particularly differentiable and inverse rendering. I have also worked on real-time rendering and GPUs, and have written plenty of other software.

I have been fortunate to intern at NVIDIA Research (2024), working with Thomas Müller, Merlin Nimier-David, and Alex Keller, and at Meta Reality Labs (2023), working with Stephane Grabli, Olivier Maury, Matt Chiang, Christophe Hery, and Doug Roble.

I received my BASc in Computer Engineering from UBC, where I had the pleasure of working with Prof. Toshiya Hachisuka, Prof. Derek Nowrouzezahrai, and Prof. Tor Aamodt.

In my free time, I enjoy cooking dishes from all around the world.

Feel free to send me an email if you'd like to chat!

Publications

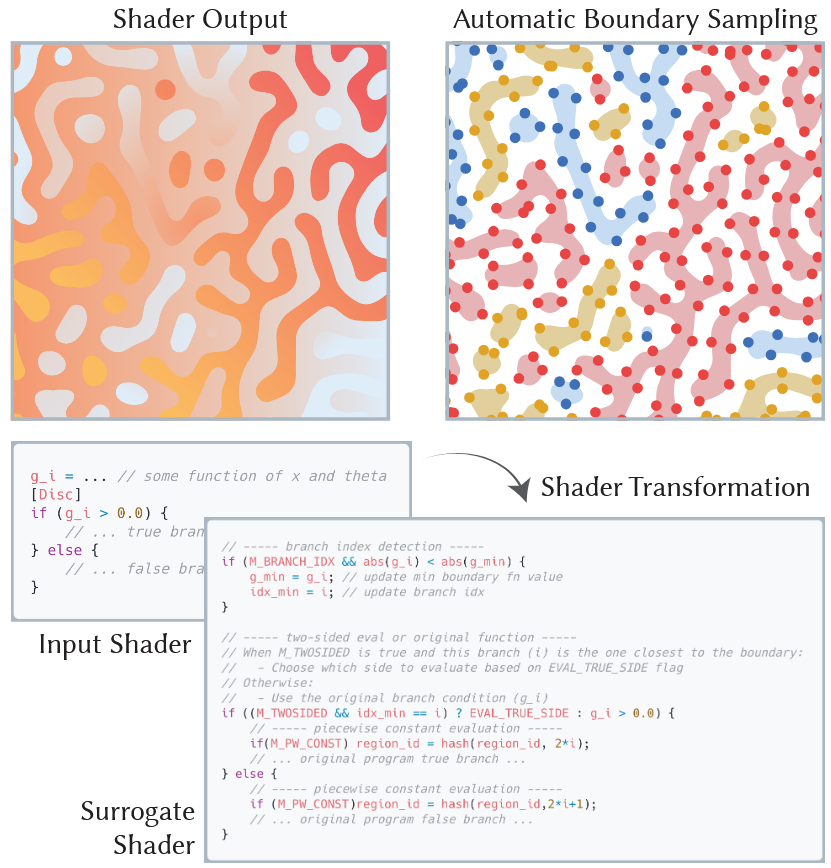

Automatic Sampling for Discontinuities in Differentiable Shaders

Yash Belhe, Ishit Mehta, Wesley Chang, Iliyan Georgiev, Michaël Gharbi, Ravi Ramamoorthi, and Tzu-Mao Li

ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia 2025)

Best Paper Award

Many tasks in graphics and vision require computing derivatives of integrals of discontinuous functions; previous approaches either required specialized routines or suffered from high variance. We introduce a program transform and boundary sampling technique that computes accurate derivatives for arbitrary shader programs, enabling applications such as painterly rendering, CSG, rasterization, discontinuous textures, and more.

Transforming Unstructured Hair Strands into Procedural Hair Grooms

Wesley Chang, Andrew L. Russell, Stephane Grabli, Matt Jen-Yuan Chiang, Christophe Hery, Doug Roble, Ravi Ramamoorthi, Tzu-Mao Li, and Olivier Maury

ACM Transactions on Graphics (Proceedings of SIGGRAPH 2025)

Recent methods can reconstruct 3D hair strand geometry from images. We introduce an inverse hair grooming pipeline that transforms these unstructured hair strands into procedural hair grooms controlled by a small set of guide strands and artist-friendly grooming operators, enabling easy editing of hair shape and style.

Vector-Valued Monte Carlo Integration Using Ratio Control Variates

Haolin Lu, Delio Vicini, Wesley Chang, and Tzu-Mao Li

ACM Transactions on Graphics (Proceedings of SIGGRAPH 2025)

Best Paper Award

Variance reduction techniques for Monte Carlo integration are typically designed for scalar-valued integrands, even though many rendering and inverse rendering tasks actually involve vector-valued integrands. We show that ratio control variates, compared to conventional difference control variates, can significantly reduce the error of vector-valued integration with minimal overhead.

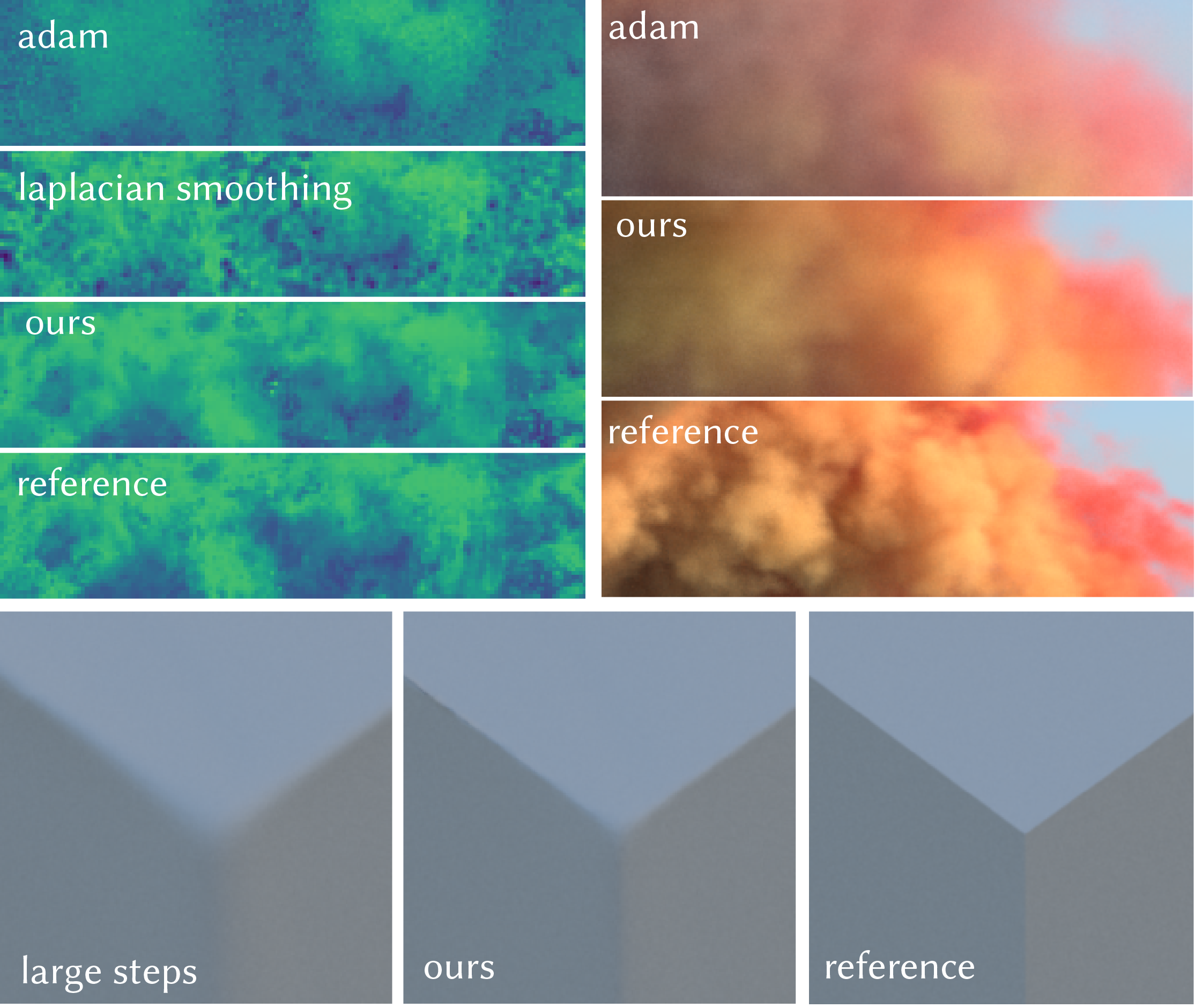

Spatiotemporal Bilateral Gradient Filtering for Inverse Rendering

Wesley Chang*, Xuanda Yang*, Yash Belhe*, Ravi Ramamoorthi, and Tzu-Mao Li

*Denotes equal contribution

SIGGRAPH Asia 2024 (Conference Track)

We introduce a spatiotemporal optimizer for inverse rendering that combines the temporal filtering of Adam with spatial cross-bilateral filtering, enabling higher-quality reconstructions in texture, volume, and geometry recovery.

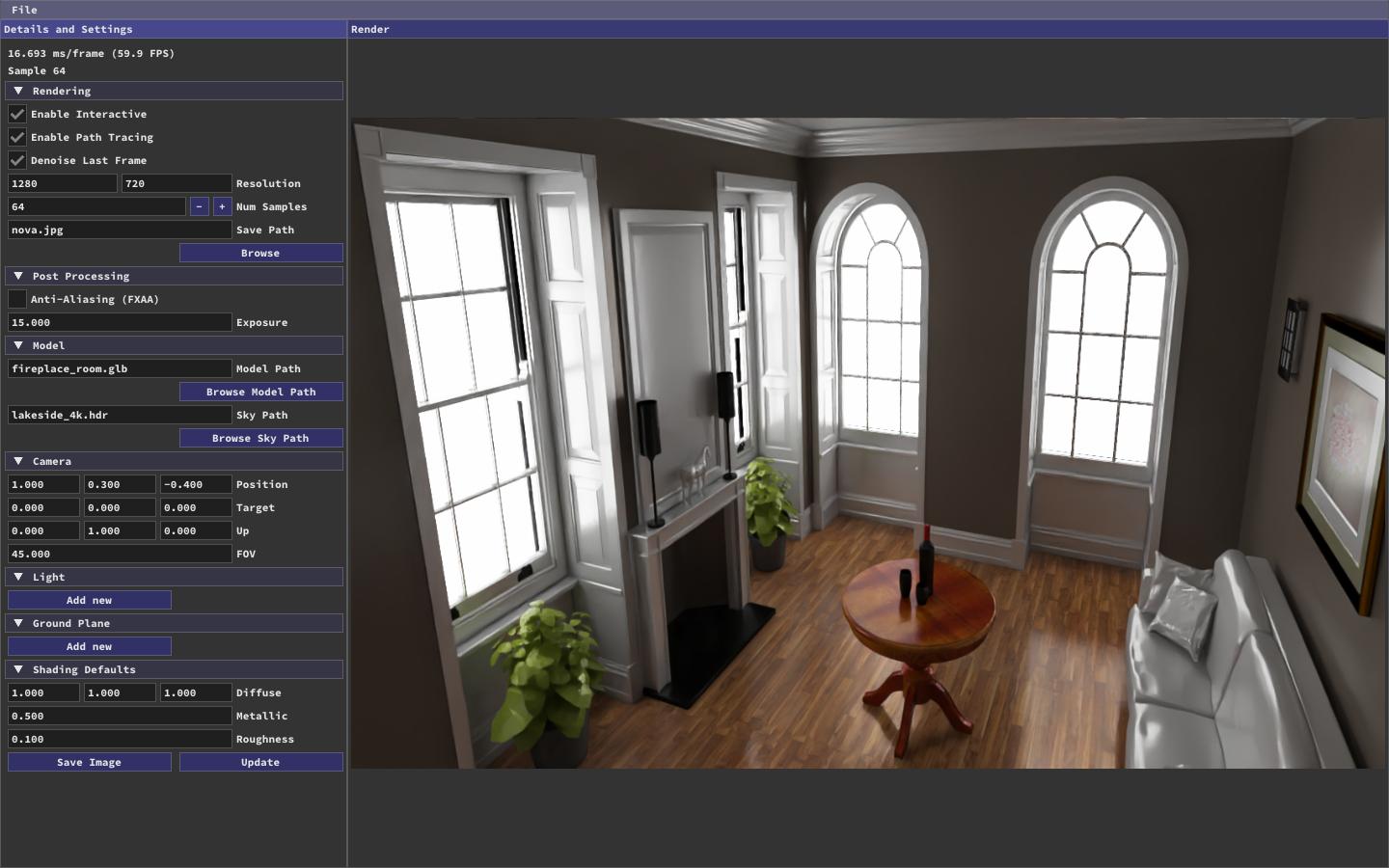

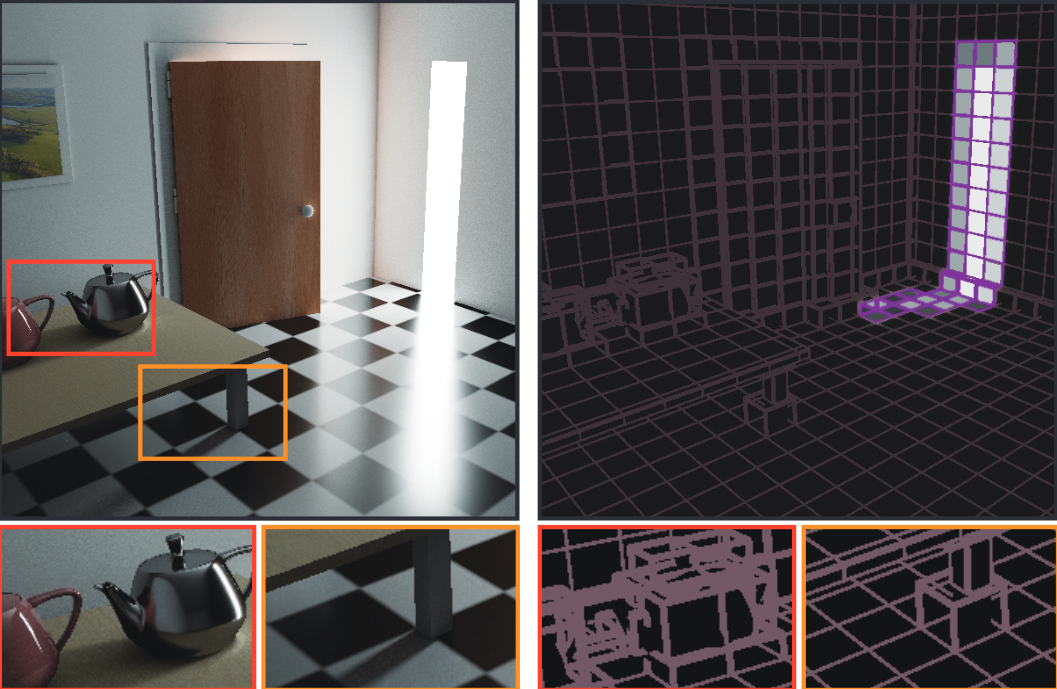

Real-Time Path Guiding Using Bounding Voxel Sampling

Haolin Lu, Wesley Chang, Trevor Hedstrom, and Tzu-Mao Li

ACM Transactions on Graphics (Proceedings of SIGGRAPH 2024)

We propose a real-time path guiding method, Voxel Path Guiding (VXPG), that significantly improves fitting efficiency under a limited sampling budget. We show that our method can outperform other real-time path guiding and virtual point light methods, particularly in complex dynamic scenes.

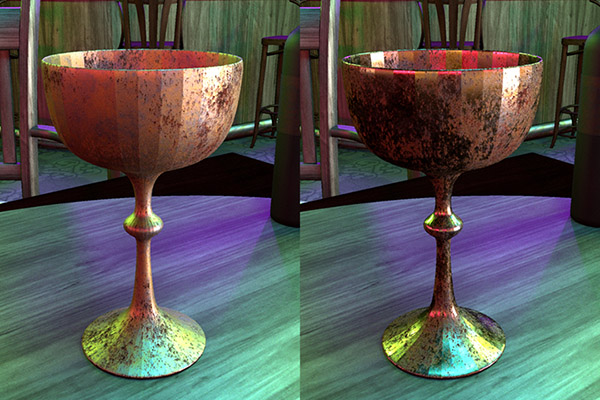

Parameter-space ReSTIR for Differentiable and Inverse Rendering

Wesley Chang, Venkataram Sivaram, Derek Nowrouzezahrai, Toshiya Hachisuka, Ravi Ramamoorthi, and Tzu-Mao Li

SIGGRAPH North America 2023 (Conference Track)

Physically based inverse rendering algorithms that use differentiable rendering typically rely on gradient descent to optimize scene parameters such as materials and lighting. We observe that, much like in animation, the scene often changes slowly from frame to frame during optimization. We therefore adapt temporal reuse from ReSTIR to reuse samples across gradient iterations and accelerate inverse rendering.

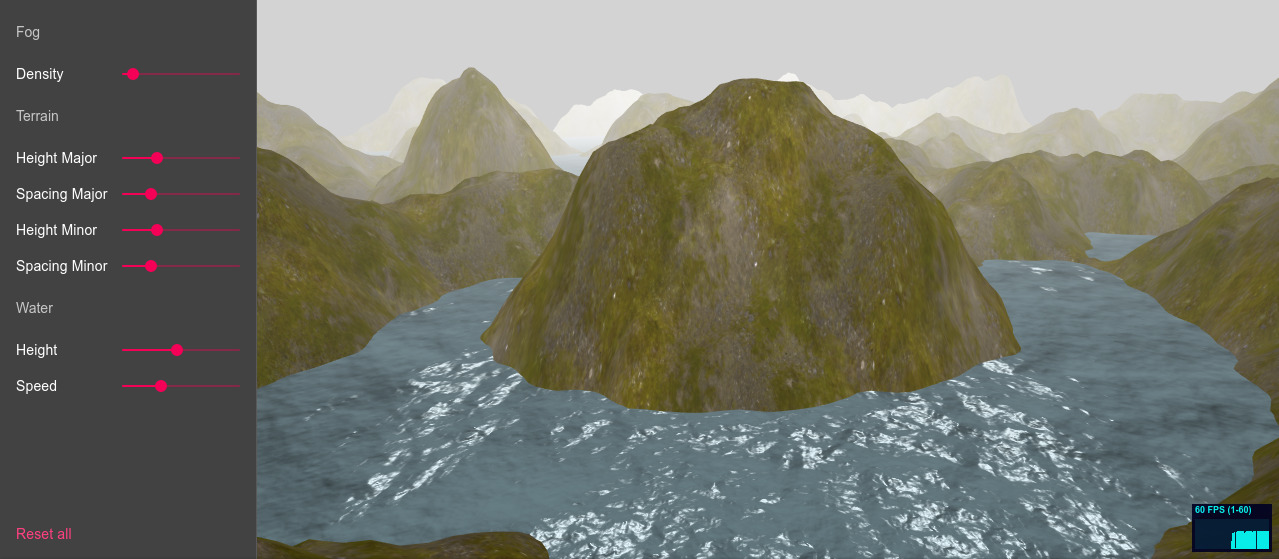

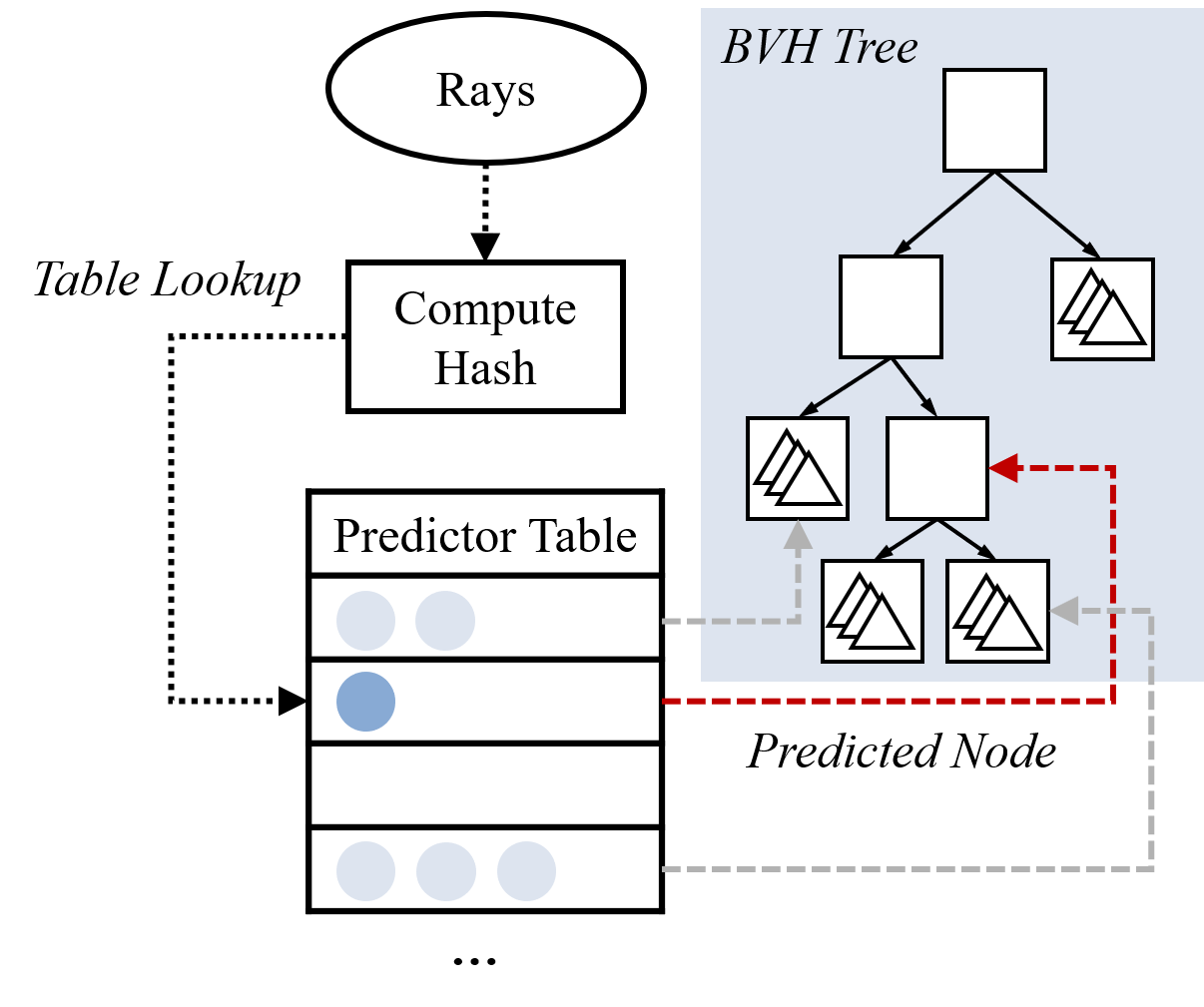

Intersection Prediction for Accelerated GPU Ray Tracing

Lufei Liu, Wesley Chang, Francois Demoullin, Yuan Hsi Chou, Mohammadreza Saed, David Pankratz, Tyler Nowicki, and Tor M. Aamodt

54th IEEE/ACM International Symposium on Microarchitecture (MICRO), 2021

Recent Graphics Processing Units (GPUs) incorporate hardware accelerator units designed for ray tracing. These accelerator units target the process of traversing hierarchical tree data structures used to test for ray-object intersections. We propose a ray intersection predictor that speculatively elides redundant operations during this process and proceeds directly to test primitives that the ray is likely to intersect.